You are looking at the documentation of a prior release. To read the documentation of the latest release, please visit here.

New to KubeDB? Please start here.

Using Prometheus (CoreOS operator) with KubeDB

This tutorial shows you how to monitor an Elasticsearch database with Prometheus via the CoreOS Prometheus Operator.

Before You Begin

First, you need a Kubernetes cluster, and the kubectl command-line tool must be configured to communicate with it. If you do not already have a cluster, you can create one using Minikube.

Next, install the KubeDB CLI on your workstation and the KubeDB operator in your cluster by following the steps here.

To keep things isolated, this tutorial uses a separate namespace called demo.

$ kubectl create ns demo

namespace "demo" created

$ kubectl get ns demo

NAME STATUS AGE

demo Active 5s

Note: The YAML files used in this tutorial are stored in the docs/examples/elasticsearch folder of the GitHub repository kubedb/cli.

This tutorial assumes that you are familiar with Elasticsearch concepts.

Deploy CoreOS-Prometheus Operator

Run the following command to deploy CoreOS-Prometheus operator.

$ kubectl create -f https://raw.githubusercontent.com/kubedb/cli/0.9.0/docs/examples/monitoring/coreos-operator/demo-0.yaml

namespace/demo configured

clusterrole.rbac.authorization.k8s.io/prometheus-operator created

serviceaccount/prometheus-operator created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-operator created

deployment.extensions/prometheus-operator created

Wait for the Deployment's Pods to reach the Running state.

$ kubectl get pods -n demo --selector=operator=prometheus

NAME READY STATUS RESTARTS AGE

prometheus-operator-857455484c-mbzsp 1/1 Running 0 57s
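The wait can also be scripted instead of checked by hand. The following is a minimal sketch and not part of the original tutorial: wait_until is a hypothetical helper that retries a command a fixed number of times, and the commented usage assumes the operator=prometheus selector shown above.

```shell
# wait_until ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND until it exits 0 or ATTEMPTS tries have been made.
# (Hypothetical helper for illustration; not provided by KubeDB or kubectl.)
wait_until() {
  attempts="$1"
  delay="$2"
  shift 2
  i=0
  while [ "$i" -lt "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    i=$((i + 1))
    # Pause between tries, but not after the final failed one.
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
  done
  return 1
}

# Possible usage against a live cluster (left commented out):
#   pod_running() {
#     [ "$(kubectl get pods -n demo --selector=operator=prometheus -o jsonpath='{.items[0].status.phase}')" = "Running" ]
#   }
#   wait_until 30 5 pod_running
```

The helper returns 0 as soon as the command succeeds and 1 once the attempt budget is exhausted, so it can guard the next step of a script.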

The CoreOS-Prometheus operator registers the Custom Resource Definitions (CRDs) that it supports.

$ kubectl get crd

NAME CREATED AT

...

alertmanagers.monitoring.coreos.com 2018-10-08T12:53:46Z

prometheuses.monitoring.coreos.com 2018-10-08T12:53:46Z

servicemonitors.monitoring.coreos.com 2018-10-08T12:53:47Z

...

Once the Prometheus CRDs are registered, run the following command to create a Prometheus object.

$ kubectl create -f https://raw.githubusercontent.com/kubedb/cli/0.9.0/docs/examples/monitoring/coreos-operator/demo-1.yaml

clusterrole.rbac.authorization.k8s.io/prometheus created

serviceaccount/prometheus created

clusterrolebinding.rbac.authorization.k8s.io/prometheus created

prometheus.monitoring.coreos.com/prometheus created

service/prometheus created

Verify the RBAC resources

$ kubectl get clusterroles

NAME AGE

...

prometheus 28s

prometheus-operator 10m

...

$ kubectl get clusterrolebindings

NAME AGE

...

prometheus 2m

prometheus-operator 11m

...

Prometheus Dashboard

Now open the Prometheus dashboard in your browser by running minikube service prometheus -n demo.

Alternatively, you can get the URL of the prometheus Service by running the following command:

$ minikube service prometheus -n demo --url

http://192.168.99.100:30900

If you are not using Minikube, browse the Prometheus dashboard at the following address: http://{Node's ExternalIP}:{NodePort of prometheus-service}.
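As an illustration of that address format, the sketch below assembles the URL from its two parts. prometheus_url is a hypothetical helper name, and the commented kubectl lookups are one possible way to obtain the inputs; they assume the Service is named prometheus in the demo namespace and that the first node reports an ExternalIP address.

```shell
# Build the dashboard URL from a node address and the Service's NodePort.
# (Hypothetical helper for illustration only.)
prometheus_url() {
  node_ip="$1"
  node_port="$2"
  printf 'http://%s:%s\n' "$node_ip" "$node_port"
}

# Against a live cluster, the two inputs could be looked up like this
# (left commented out; adjust names to your environment):
#   NODE_PORT=$(kubectl get service prometheus -n demo -o jsonpath='{.spec.ports[0].nodePort}')
#   NODE_IP=$(kubectl get nodes -o jsonpath='{.items[0].status.addresses[?(@.type=="ExternalIP")].address}')
#   prometheus_url "$NODE_IP" "$NODE_PORT"

# Example with the values from the Minikube output above:
prometheus_url 192.168.99.100 30900
```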

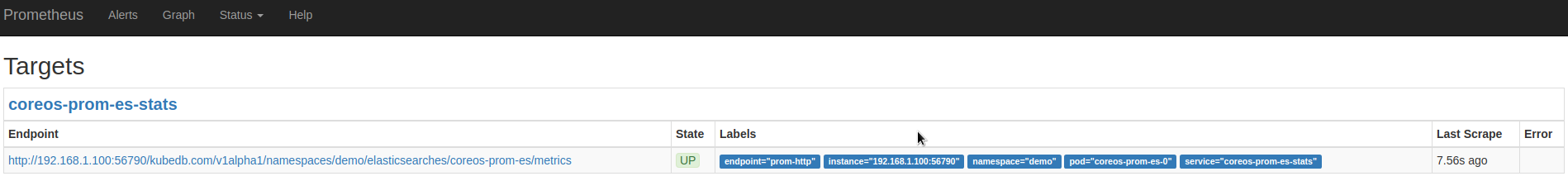

If you open the Prometheus dashboard now, you will see that the target list is empty.

Find out required label for ServiceMonitor

First, list the existing objects of kind Prometheus.

$ kubectl get prometheus --all-namespaces

NAMESPACE NAME AGE

demo prometheus 20m

If you inspect the full spec of the Prometheus object named prometheus, you will find a field called serviceMonitorSelector. The matchLabels value under serviceMonitorSelector is the label you must set in the KubeDB monitoring spec field monitor.prometheus.labels.

$ kubectl get prometheus -n demo prometheus -o yaml

apiVersion: monitoring.coreos.com/v1
kind: Prometheus
metadata:
  creationTimestamp: 2018-11-15T10:40:57Z
  generation: 1
  name: prometheus
  namespace: demo
  resourceVersion: "1661"
  selfLink: /apis/monitoring.coreos.com/v1/namespaces/demo/prometheuses/prometheus
  uid: ef59e6e6-e8c2-11e8-8e44-08002771fd7b
spec:
  resources:
    requests:
      memory: 400Mi
  serviceAccountName: prometheus
  serviceMonitorSelector:
    matchLabels:
      app: kubedb
  version: v1.7.0

In this tutorial, the required label is app: kubedb.
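To make the selection rule concrete: a matchLabels selector picks up an object only when every key/value pair in the selector also appears among the object's labels. The sketch below models that rule in plain shell. labels_match is a hypothetical helper, and passing labels as comma-separated key=value lists is a convention chosen here for illustration; the commented kubectl line is one possible way to print the selector from a live cluster.

```shell
# labels_match SELECTOR LABELS
# Exits 0 when every key=value pair in SELECTOR is present in LABELS.
# Both arguments are comma-separated key=value lists.
# The body runs in a subshell so the IFS change does not leak to the caller.
# (Hypothetical helper for illustration only.)
labels_match() (
  selector="$1"
  labels=",$2,"
  IFS=','
  for pair in $selector; do
    case "$labels" in
      *",$pair,"*) ;;   # this pair is present, keep checking
      *) exit 1 ;;      # a required pair is missing
    esac
  done
  exit 0
)

# The selector could be read from the cluster with (left commented out):
#   kubectl get prometheus -n demo prometheus -o jsonpath='{.spec.serviceMonitorSelector.matchLabels}'

if labels_match "app=kubedb" "app=kubedb"; then
  echo "a ServiceMonitor labeled app=kubedb would be selected"
fi
```

An empty selector matches any label set in this sketch, which mirrors how an empty label selector behaves in Kubernetes.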

Monitor Elasticsearch with CoreOS Prometheus

Below is the Elasticsearch object created in this tutorial.

apiVersion: kubedb.com/v1alpha1
kind: Elasticsearch
metadata:
  name: coreos-prom-es
  namespace: demo
spec:
  version: "6.3-v1"
  storage:
    storageClassName: "standard"
    accessModes:
    - ReadWriteOnce
    resources:
      requests:
        storage: 50Mi
  monitor:
    agent: prometheus.io/coreos-operator
    prometheus:
      namespace: demo
      labels:
        app: kubedb
      interval: 10s

Here,

- monitor.agent indicates the monitoring agent. For the CoreOS Prometheus operator, the value is prometheus.io/coreos-operator, as in the object above.

- monitor.prometheus specifies the information for monitoring by Prometheus.

- prometheus.namespace specifies the namespace where the ServiceMonitor is created.

- prometheus.labels specifies the labels applied to the ServiceMonitor.

- prometheus.port indicates the port for the Elasticsearch exporter endpoint (default is 56790).

- prometheus.interval indicates the scraping interval (e.g., 10s).

Now create this Elasticsearch object with the monitoring spec:

$ kubectl create -f https://raw.githubusercontent.com/kubedb/cli/0.9.0/docs/examples/elasticsearch/monitoring/coreos-prom-es.yaml

elasticsearch.kubedb.com/coreos-prom-es created

The KubeDB operator creates a ServiceMonitor object once the Elasticsearch database is running successfully.

$ kubectl get es -n demo coreos-prom-es

NAME VERSION STATUS AGE

coreos-prom-es 6.3-v1 Running 1m

You can verify that the ServiceMonitor exists by running the following command:

$ kubectl get servicemonitor -n demo --selector="app=kubedb"

NAME AGE

kubedb-demo-coreos-prom-es 1m

Now, if you go to the Prometheus dashboard, you will see this database endpoint in the target list.

Cleaning up

To clean up the Kubernetes resources created by this tutorial, run the following commands:

$ kubectl patch -n demo es/coreos-prom-es -p '{"spec":{"terminationPolicy":"WipeOut"}}' --type="merge"

$ kubectl delete -n demo es/coreos-prom-es

$ kubectl delete -n demo deployment/prometheus-operator

$ kubectl delete -n demo service/prometheus

$ kubectl delete -n demo service/prometheus-operated

$ kubectl delete -n demo statefulset.apps/prometheus-prometheus

$ kubectl delete clusterrolebindings prometheus-operator prometheus

$ kubectl delete clusterrole prometheus-operator prometheus

$ kubectl delete ns demo

namespace "demo" deleted

Next Steps

- Learn about taking instant backup of Elasticsearch database using KubeDB.

- Learn how to schedule backup of Elasticsearch database.

- Learn about initializing Elasticsearch with Snapshot.

- Learn how to configure Elasticsearch Topology.

- Monitor your Elasticsearch database with KubeDB using out-of-the-box builtin-Prometheus.

- Detail concepts of Elasticsearch object.

- Detail concepts of Snapshot object.

- Use private Docker registry to deploy Elasticsearch with KubeDB.

- Want to hack on KubeDB? Check our contribution guidelines.