You are looking at the documentation of a prior release. To read the documentation of the latest release, please visit here.

New to KubeDB? Please start here.

KubeDB Snapshot

KubeDB operator maintains another Custom Resource Definition (CRD) for database backups called Snapshot. Snapshot object is used to take backup or restore from a backup.

Before You Begin

First, you need a Kubernetes cluster, and the kubectl command-line tool must be configured to communicate with your cluster. If you do not already have a cluster, you can create one by using kind.

Now, install the KubeDB CLI on your workstation and the KubeDB operator in your cluster following the steps here.

To keep things isolated, this tutorial uses a separate namespace called demo.

$ kubectl create ns demo

namespace "demo" created

$ kubectl get ns demo

NAME STATUS AGE

demo Active 5s

Note: YAML files used in this tutorial are stored in docs/examples/elasticsearch folder in GitHub repository kubedb/docs.

Prepare Database

We need an Elasticsearch object in Running phase to perform backup operation. If you do not already have an Elasticsearch instance running, create one first.

$ kubectl create -f https://github.com/kubedb/docs/raw/v2020.07.10-beta.0/docs/examples/elasticsearch/quickstart/instant-elasticsearch.yaml

elasticsearch "instant-elasticsearch" created

Below is the YAML for the Elasticsearch crd we have created above.

apiVersion: kubedb.com/v1alpha1
kind: Elasticsearch
metadata:
  name: instant-elasticsearch
  namespace: demo
spec:
  version: 7.3.2
  replicas: 1
  storageType: Ephemeral

Here, we have used spec.storageType: Ephemeral, so we don’t need to specify the storage section. KubeDB will use an emptyDir volume for this database.

Verify that the Elasticsearch is running,

$ kubectl get es -n demo instant-elasticsearch

NAME VERSION STATUS AGE

instant-elasticsearch 7.3.2 Running 41s

Populate database

Let’s insert some data so that we can verify that the snapshot contains those data.

Connection information:

Address: localhost:9200

Username: Run the following command to get the username

$ kubectl get secrets -n demo instant-elasticsearch-auth -o jsonpath='{.data.\ADMIN_USERNAME}' | base64 -d

elastic

Password: Run the following command to get the password

$ kubectl get secrets -n demo instant-elasticsearch-auth -o jsonpath='{.data.\ADMIN_PASSWORD}' | base64 -d

dy76ez7v

Let’s forward port 9200 of our database pod. Run the following command in a separate terminal:

$ kubectl port-forward -n demo instant-elasticsearch-0 9200

Forwarding from 127.0.0.1:9200 -> 9200

Forwarding from [::1]:9200 -> 9200
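To avoid copying the credentials by hand, the two Secret lookups above can be wrapped in a small helper. This is only a sketch: the function name es_secret_value is hypothetical, and it assumes the Secret name (instant-elasticsearch-auth) and key names (ADMIN_USERNAME, ADMIN_PASSWORD) shown above.

```shell
# Hypothetical helper (not part of KubeDB): print the decoded value of one
# key from the instant-elasticsearch-auth Secret in the demo namespace.
# Argument: the key name, e.g. ADMIN_USERNAME or ADMIN_PASSWORD.
es_secret_value() {
  kubectl get secrets -n demo instant-elasticsearch-auth \
    -o jsonpath="{.data.$1}" | base64 -d
}

# Example use with curl, once the port-forward is running:
# curl --user "$(es_secret_value ADMIN_USERNAME):$(es_secret_value ADMIN_PASSWORD)" \
#   "localhost:9200/_cluster/health?pretty"
```

With this in place, the password never has to be pasted into a command line.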

Now let’s check health of our Elasticsearch database.

$ curl --user "elastic:dy76ez7v" "localhost:9200/_cluster/health?pretty"

{

"cluster_name" : "instant-elasticsearch",

"status" : "green",

"timed_out" : false,

"number_of_nodes" : 1,

"number_of_data_nodes" : 1,

"active_primary_shards" : 0,

"active_shards" : 0,

"relocating_shards" : 0,

"initializing_shards" : 0,

"unassigned_shards" : 0,

"delayed_unassigned_shards" : 0,

"number_of_pending_tasks" : 0,

"number_of_in_flight_fetch" : 0,

"task_max_waiting_in_queue_millis" : 0,

"active_shards_percent_as_number" : 100.0

}

$ curl -XPUT --user "elastic:dy76ez7v" "localhost:9200/test/snapshot/1?pretty" -H 'Content-Type: application/json' -d' {

"title": "Snapshot",

"text": "Testing instand backup",

"date": "2018/02/13"

}'

{

"_index" : "test",

"_type" : "snapshot",

"_id" : "1",

"_version" : 1,

"result" : "created",

"_shards" : {

"total" : 2,

"successful" : 1,

"failed" : 0

},

"_seq_no" : 0,

"_primary_term" : 1

}

$ curl -XGET --user "elastic:dy76ez7v" "localhost:9200/test/snapshot/1?pretty"

{

"_index" : "test",

"_type" : "snapshot",

"_id" : "1",

"_version" : 1,

"_seq_no" : 0,

"_primary_term" : 1,

"found" : true,

"_source" : {

"title" : "Snapshot",

"text" : "Testing instand backup",

"date" : "2018/02/13"

}

}

Now, we are ready to take a backup of the instant-elasticsearch database.

Instant backup

Snapshot provides a declarative configuration for backup behavior in a Kubernetes native way.

Below is the Snapshot object created in this tutorial.

apiVersion: kubedb.com/v1alpha1
kind: Snapshot
metadata:
  name: instant-snapshot
  namespace: demo
  labels:
    kubedb.com/kind: Elasticsearch
spec:
  databaseName: instant-elasticsearch
  storageSecretName: gcs-secret
  gcs:
    bucket: kubedb

Here,

- metadata.labels should include the type of database.

- spec.databaseName indicates the Elasticsearch object name, instant-elasticsearch, whose snapshot is taken.

- spec.storageSecretName points to the Secret containing the credentials for snapshot storage destination.

- spec.gcs.bucket points to the bucket name used to store the snapshot data.

In this case, kubedb.com/kind: Elasticsearch tells KubeDB operator that this Snapshot belongs to an Elasticsearch object. Only Elasticsearch controller will handle this Snapshot object.

Note: Snapshot and Secret objects must be in the same namespace as Elasticsearch, instant-elasticsearch.
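The fields described above can also be filled in from the command line. The following is a minimal sketch, assuming only the fields shown in this tutorial's Snapshot object; the function name render_snapshot is hypothetical, and the namespace is fixed to demo.

```shell
# Hypothetical helper (not part of KubeDB): print a Snapshot manifest for an
# Elasticsearch database in the demo namespace.
# Arguments: snapshot name, database name, storage secret name, GCS bucket.
render_snapshot() {
  cat <<EOF
apiVersion: kubedb.com/v1alpha1
kind: Snapshot
metadata:
  name: $1
  namespace: demo
  labels:
    kubedb.com/kind: Elasticsearch
spec:
  databaseName: $2
  storageSecretName: $3
  gcs:
    bucket: $4
EOF
}

# Example: render the manifest used in this tutorial and create it.
# render_snapshot instant-snapshot instant-elasticsearch gcs-secret kubedb | kubectl create -f -
```

Generating the manifest this way keeps the kubedb.com/kind label and the database name together, so a Snapshot is less likely to be created without the label its controller relies on.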

Snapshot Storage Secret

Storage Secret should contain credentials that will be used to access storage destination. In this tutorial, snapshot data will be stored in a Google Cloud Storage (GCS) bucket.

For that a storage Secret is needed with following 2 keys:

| Key | Description |
|---|---|
| GOOGLE_PROJECT_ID | Required. Google Cloud project ID |
| GOOGLE_SERVICE_ACCOUNT_JSON_KEY | Required. Google Cloud service account json key |

$ echo -n '<your-project-id>' > GOOGLE_PROJECT_ID

$ mv downloaded-sa-json.key GOOGLE_SERVICE_ACCOUNT_JSON_KEY

$ kubectl create secret -n demo generic gcs-secret \

--from-file=./GOOGLE_PROJECT_ID \

--from-file=./GOOGLE_SERVICE_ACCOUNT_JSON_KEY

secret "gcs-secret" created

$ kubectl get secret -n demo gcs-secret -o yaml

apiVersion: v1
data:
  GOOGLE_PROJECT_ID: PHlvdXItcHJvamVjdC1pZD4=
  GOOGLE_SERVICE_ACCOUNT_JSON_KEY: ewogICJ0eXBlIjogInNlcnZpY2VfYWNjb3V...9tIgp9Cg==
kind: Secret
metadata:
  creationTimestamp: 2018-02-13T06:35:36Z
  name: gcs-secret
  namespace: demo
  resourceVersion: "4308"
  selfLink: /api/v1/namespaces/demo/secrets/gcs-secret
  uid: 19a77054-1088-11e8-9e42-0800271bdbb6
type: Opaque
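Before running kubectl create secret, it may be worth confirming that both key files exist and are non-empty, since an empty key file is easy to create by accident (for example, through a stray shell redirection). The check below is a sketch; the function name check_gcs_secret_files is hypothetical, and it only looks at the two file names used in this tutorial.

```shell
# Hypothetical pre-flight check (not part of KubeDB): succeed only if both
# key files exist in the given directory and are non-empty.
# Argument: directory holding the files (defaults to the current directory).
check_gcs_secret_files() {
  dir="${1:-.}"
  for f in GOOGLE_PROJECT_ID GOOGLE_SERVICE_ACCOUNT_JSON_KEY; do
    # -s is true only when the file exists and has a size greater than zero.
    if [ ! -s "$dir/$f" ]; then
      echo "missing or empty: $dir/$f" >&2
      return 1
    fi
  done
  echo "ok"
}

# Example: check_gcs_secret_files . && kubectl create secret -n demo generic gcs-secret \
#   --from-file=./GOOGLE_PROJECT_ID --from-file=./GOOGLE_SERVICE_ACCOUNT_JSON_KEY
```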

Snapshot storage backend

KubeDB supports various cloud providers (S3, GCS, Azure, OpenStack Swift and/or locally mounted volumes) as snapshot storage backend. In this tutorial, GCS backend is used.

To configure this backend, following parameters are available:

| Parameter | Description |
|---|---|
| spec.gcs.bucket | Required. Name of bucket |
| spec.gcs.prefix | Optional. Path prefix into bucket where snapshot data will be stored |

The open source project osm is used to store snapshot data in cloud storage.

To learn how to configure other storage destinations for snapshot data, please visit here.

Now, create the Snapshot object.

$ kubectl create -f https://github.com/kubedb/docs/raw/v2020.07.10-beta.0/docs/examples/elasticsearch/snapshot/instant-snapshot.yaml

snapshot.kubedb.com/instant-snapshot created

Let’s see Snapshot list of Elasticsearch instant-elasticsearch.

$ kubectl get snap -n demo --selector=kubedb.com/kind=Elasticsearch,kubedb.com/name=instant-elasticsearch

NAME DATABASENAME STATUS AGE

instant-snapshot instant-elasticsearch Succeeded 26s

KubeDB operator watches for Snapshot objects using Kubernetes API. When a Snapshot object is created, it will launch a Job that runs the elasticdump command and uploads the output files to cloud storage using osm.
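Because the backup runs as a Job, the Snapshot does not reach its final status immediately. A script can poll for it, as in the sketch below. It assumes the phase shown in the STATUS column is exposed at .status.phase and that the terminal phases are Succeeded and Failed; the function name wait_for_snapshot and the 5-second interval are illustrative choices.

```shell
# Hypothetical helper (not part of KubeDB): poll a Snapshot until it reaches
# a terminal phase. Assumes the phase is available at .status.phase.
# Arguments: snapshot name, namespace, maximum attempts (default 60).
wait_for_snapshot() {
  name="$1"; ns="$2"; tries="${3:-60}"
  i=0
  while [ "$i" -lt "$tries" ]; do
    # Treat a failed lookup as "no phase yet" and keep polling.
    phase="$(kubectl get snap -n "$ns" "$name" -o jsonpath='{.status.phase}')" || phase=""
    case "$phase" in
      Succeeded) echo "Succeeded"; return 0 ;;
      Failed)    echo "Failed" >&2; return 1 ;;
    esac
    i=$((i + 1))
    sleep 5
  done
  echo "timed out waiting for snapshot $name" >&2
  return 2
}

# Example: wait_for_snapshot instant-snapshot demo
```

The distinct return codes (0, 1, 2) let a calling script tell a failed backup apart from one that is still running when the attempts run out.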

Snapshot data is stored in a folder called {bucket}/{prefix}/kubedb/{namespace}/{elasticsearch}/{snapshot}/.
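To make the folder layout concrete, the sketch below builds the path from its parts. The function name snapshot_path is hypothetical, and dropping the prefix segment when spec.gcs.prefix is unset is an assumption made for illustration, not behavior confirmed by this document.

```shell
# Hypothetical helper (not part of KubeDB): print the folder where snapshot
# data is stored, following {bucket}/{prefix}/kubedb/{namespace}/{elasticsearch}/{snapshot}/.
# Arguments: bucket, prefix (may be empty), namespace, database, snapshot.
snapshot_path() {
  bucket="$1"; prefix="$2"; ns="$3"; db="$4"; snap="$5"
  if [ -n "$prefix" ]; then
    echo "$bucket/$prefix/kubedb/$ns/$db/$snap/"
  else
    # Assumption: with no prefix set, the prefix segment is simply omitted.
    echo "$bucket/kubedb/$ns/$db/$snap/"
  fi
}

snapshot_path kubedb "" demo instant-elasticsearch instant-snapshot
# prints: kubedb/kubedb/demo/instant-elasticsearch/instant-snapshot/
```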

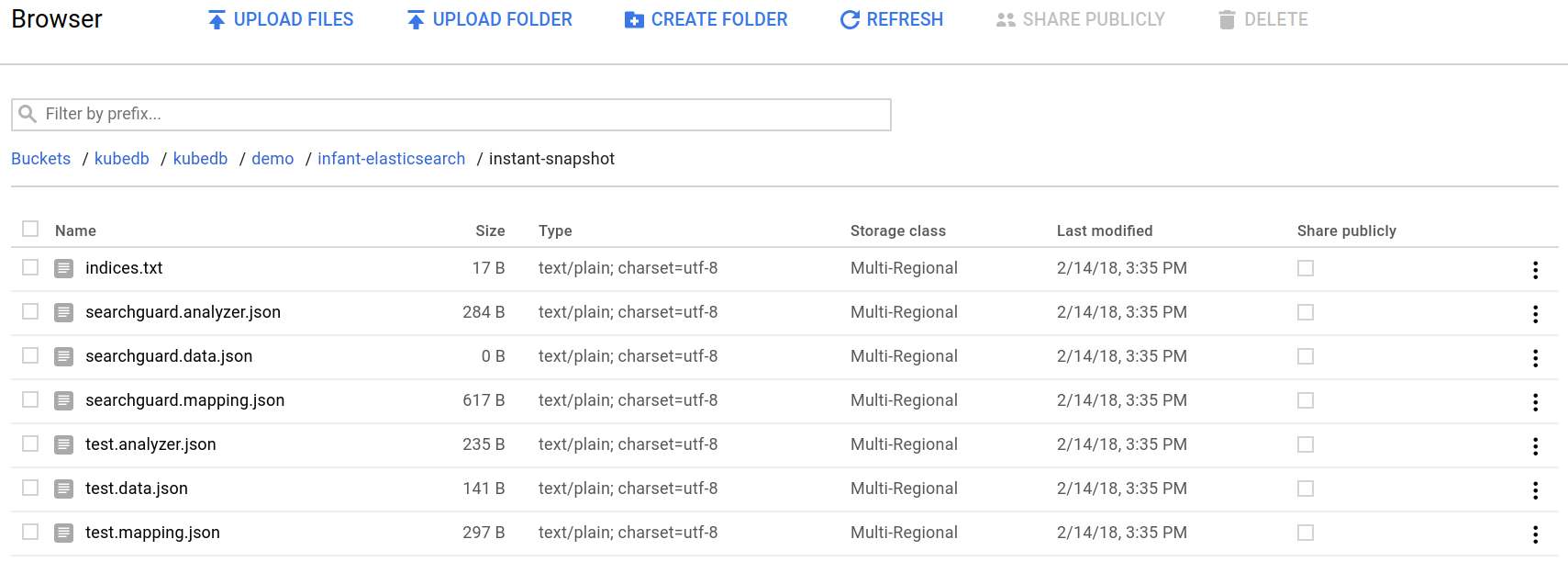

Once the snapshot Job is completed, you can see the output of the elasticdump command stored in the GCS bucket.

From the above image, you can see that the snapshot data files for index test are stored in your bucket.

If you open this test.data.json file, you will see the data you have created previously.

{

"_index":"test",

"_type":"snapshot",

"_id":"1",

"_score":1,

"_source":{

"title":"Snapshot",

"text":"Testing instand backup",

"date":"2018/02/13"

}

}

Let’s see the Snapshot list for Elasticsearch instant-elasticsearch by running kubectl dba describe command.

$ kubectl dba describe es -n demo instant-elasticsearch

Name: instant-elasticsearch

Namespace: demo

CreationTimestamp: Wed, 02 Oct 2019 11:26:44 +0600

Labels: <none>

Annotations: <none>

Status: Running

Replicas: 1 total

StorageType: Ephemeral

No volumes.

StatefulSet:

Name: instant-elasticsearch

CreationTimestamp: Wed, 02 Oct 2019 11:26:45 +0600

Labels: app.kubernetes.io/component=database

app.kubernetes.io/instance=instant-elasticsearch

app.kubernetes.io/managed-by=kubedb.com

app.kubernetes.io/name=elasticsearch

app.kubernetes.io/version=7.3.2

kubedb.com/kind=Elasticsearch

kubedb.com/name=instant-elasticsearch

node.role.client=set

node.role.data=set

node.role.master=set

Annotations: <none>

Replicas: 824638718776 desired | 1 total

Pods Status: 1 Running / 0 Waiting / 0 Succeeded / 0 Failed

Service:

Name: instant-elasticsearch

Labels: app.kubernetes.io/component=database

app.kubernetes.io/instance=instant-elasticsearch

app.kubernetes.io/managed-by=kubedb.com

app.kubernetes.io/name=elasticsearch

app.kubernetes.io/version=7.3.2

kubedb.com/kind=Elasticsearch

kubedb.com/name=instant-elasticsearch

Annotations: <none>

Type: ClusterIP

IP: 10.0.1.145

Port: http 9200/TCP

TargetPort: http/TCP

Endpoints: 10.4.0.19:9200

Service:

Name: instant-elasticsearch-master

Labels: app.kubernetes.io/component=database

app.kubernetes.io/instance=instant-elasticsearch

app.kubernetes.io/managed-by=kubedb.com

app.kubernetes.io/name=elasticsearch

app.kubernetes.io/version=7.3.2

kubedb.com/kind=Elasticsearch

kubedb.com/name=instant-elasticsearch

Annotations: <none>

Type: ClusterIP

IP: 10.0.1.247

Port: transport 9300/TCP

TargetPort: transport/TCP

Endpoints: 10.4.0.19:9300

Database Secret:

Name: instant-elasticsearch-auth

Labels: app.kubernetes.io/component=database

app.kubernetes.io/instance=instant-elasticsearch

app.kubernetes.io/managed-by=kubedb.com

app.kubernetes.io/name=elasticsearch

app.kubernetes.io/version=7.3.2

kubedb.com/kind=Elasticsearch

kubedb.com/name=instant-elasticsearch

Annotations: <none>

Type: Opaque

Data

====

ADMIN_PASSWORD: 8 bytes

ADMIN_USERNAME: 7 bytes

Certificate Secret:

Name: instant-elasticsearch-cert

Labels: app.kubernetes.io/component=database

app.kubernetes.io/instance=instant-elasticsearch

app.kubernetes.io/managed-by=kubedb.com

app.kubernetes.io/name=elasticsearch

app.kubernetes.io/version=7.3.2

kubedb.com/kind=Elasticsearch

kubedb.com/name=instant-elasticsearch

Annotations: <none>

Type: Opaque

Data

====

client.jks: 3053 bytes

key_pass: 6 bytes

node.jks: 3014 bytes

root.jks: 863 bytes

root.pem: 1139 bytes

Topology:

Type Pod StartTime Phase

---- --- --------- -----

master|client|data instant-elasticsearch-0 2019-10-02 11:26:45 +0600 +06 Running

Snapshots:

Name Bucket StartTime CompletionTime Phase

---- ------ --------- -------------- -----

instant-snapshot gs:kubedb-qa Wed, 02 Oct 2019 11:38:19 +0600 Wed, 02 Oct 2019 11:38:37 +0600 Succeeded

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Successful 12m Elasticsearch operator Successfully created Service

Normal Successful 12m Elasticsearch operator Successfully created Service

Normal Successful 12m Elasticsearch operator Successfully created StatefulSet

Normal Successful 11m Elasticsearch operator Successfully created Elasticsearch

Normal Successful 11m Elasticsearch operator Successfully created appbinding

Normal Successful 11m Elasticsearch operator Successfully patched StatefulSet

Normal Successful 11m Elasticsearch operator Successfully patched Elasticsearch

Normal Starting 49s Elasticsearch operator Backup running

Normal SuccessfulSnapshot 31s Elasticsearch operator Successfully completed snapshot

From the above output, we can see in Snapshots: section that we have one successful snapshot.

Delete Snapshot

If you want to delete snapshot data from storage, you can delete Snapshot object.

$ kubectl delete snap -n demo instant-snapshot

snapshot "instant-snapshot" deleted

Once Snapshot object is deleted, you can’t revert this process and snapshot data from storage will be deleted permanently.

Customizing Snapshot

You can customize the pod template spec and volume claim spec for backup and restore jobs. For detailed options, read this doc.

Some common customization examples are shown below:

Specify PVC Template:

Backup and recovery jobs use temporary storage to hold dump files before they can be uploaded to the cloud backend or restored into the database. By default, KubeDB reads the storage specification from the spec.storage section of the database crd and creates a PVC with a similar specification for the backup or recovery job. However, if you want to specify a custom PVC template, you can do it via the spec.podVolumeClaimSpec field of the Snapshot crd. This is particularly helpful when you want backup or recovery jobs to use a different storageclass than the database.

apiVersion: kubedb.com/v1alpha1
kind: Snapshot
metadata:
  name: instant-snapshot
  namespace: demo
  labels:
    kubedb.com/kind: Elasticsearch
spec:
  databaseName: instant-elasticsearch
  storageSecretName: gcs-secret
  gcs:
    bucket: kubedb-dev
  podVolumeClaimSpec:
    storageClassName: "standard"
    accessModes:
    - ReadWriteOnce
    resources:
      requests:
        storage: 1Gi # make sure size is larger than or equal to your database size

Specify Resources for Backup/Recovery Jobs:

You can specify resources for backup or recovery jobs using spec.podTemplate.spec.resources field.

apiVersion: kubedb.com/v1alpha1
kind: Snapshot
metadata:
  name: instant-snapshot
  namespace: demo
  labels:
    kubedb.com/kind: Elasticsearch
spec:
  databaseName: instant-elasticsearch
  storageSecretName: gcs-secret
  gcs:
    bucket: kubedb-dev
  podTemplate:
    spec:
      resources:
        requests:
          memory: "64Mi"
          cpu: "250m"
        limits:
          memory: "128Mi"
          cpu: "500m"

Provide Annotations for Backup/Recovery Jobs:

If you need to add some annotations to backup or recovery jobs, you can specify those in spec.podTemplate.controller.annotations. You can also specify annotations for the pod created by backup or recovery jobs through spec.podTemplate.annotations field.

apiVersion: kubedb.com/v1alpha1
kind: Snapshot
metadata:
  name: instant-snapshot
  namespace: demo
  labels:
    kubedb.com/kind: Elasticsearch
spec:
  databaseName: instant-elasticsearch
  storageSecretName: gcs-secret
  gcs:
    bucket: kubedb-dev
  podTemplate:
    annotations:
      passMe: ToBackupJobPod
    controller:
      annotations:
        passMe: ToBackupJob

Pass Arguments to Backup/Recovery Job:

KubeDB allows users to pass extra arguments for backup or recovery jobs. You can provide these arguments through spec.podTemplate.spec.args field of Snapshot crd.

apiVersion: kubedb.com/v1alpha1
kind: Snapshot
metadata:
  name: instant-snapshot
  namespace: demo
  labels:
    kubedb.com/kind: Elasticsearch
spec:
  databaseName: instant-elasticsearch
  storageSecretName: gcs-secret
  gcs:
    bucket: kubedb-dev
  podTemplate:
    spec:
      args:
      - --extra-args-to-backup-command

Cleaning up

To clean up the Kubernetes resources created by this tutorial, run:

kubectl patch -n demo es/instant-elasticsearch -p '{"spec":{"terminationPolicy":"WipeOut"}}' --type="merge"

kubectl delete -n demo es/instant-elasticsearch

kubectl delete ns demo

Next Steps

- See the list of supported storage providers for snapshots here.

- Learn how to schedule backup of Elasticsearch database.

- Learn about initializing Elasticsearch with Snapshot.

- Learn how to configure Elasticsearch Topology.

- Monitor your Elasticsearch database with KubeDB using out-of-the-box builtin-Prometheus.

- Monitor your Elasticsearch database with KubeDB using out-of-the-box CoreOS Prometheus Operator.

- Use private Docker registry to deploy Elasticsearch with KubeDB.

- Want to hack on KubeDB? Check our contribution guidelines.